如何网络抓取理想主义者:综合指南(2023 年更新)

Raluca Penciuc on Mar 03 2023

Idealista is one of the leading real estate websites in Southern Europe, providing a wealth of information on properties for sale and rent. It’s available in Spain, Portugal, and Italy, listing millions of homes, rooms, and apartments.

For businesses and individuals looking to gain insights into the Spanish property market, the website can be a precious tool. Web scraping Idealista can help you extract this valuable information and use it in various ways such as market research, lead generation, and creating new business opportunities.

In this article, we will provide a step-by-step guide on how to scrape the website using TypeScript. We will cover the prerequisites, the actual scraping of properties data and how to improve the process, and why using a professional scraper is better than creating your own.

By the end of the article, you will have the knowledge and tools to extract data from Idealista and make good use of it for your business.

先决条件

Before we begin, let's ensure we have the necessary tools.

首先,从官方网站下载并安装 Node.js,确保使用长期支持 (LTS) 版本。这也将自动安装 Node Package Manager(NPM),我们将使用它来安装更多依赖项。

在本教程中,我们将使用 Visual Studio Code 作为集成开发环境 (IDE),但您也可以选择使用任何其他 IDE。为项目创建一个新文件夹,打开终端,运行以下命令建立一个新的 Node.js 项目:

npm init -y

这将在项目目录中创建package.json文件,其中将存储有关项目及其依赖项的信息。

接下来,我们需要安装 TypeScript 和 Node.js 的类型定义。TypeScript 提供可选的静态类型,有助于防止代码出错。为此,请在终端运行

npm install typescript @types/node --save-dev

您可以运行

npx tsc --version

TypeScript 使用名为tsconfig.json的配置文件来存储编译器选项和其他设置。要在项目中创建该文件,请运行以下命令:

npx tsc -init

Make sure that the value for “outDir” is set to “dist”. This way we will separate the TypeScript files from the compiled ones. You can find more information about this file and its properties in the official TypeScript documentation.

现在,在项目中创建一个 "src"目录和一个新的 "index.ts"文件。我们将在这里保存刮擦代码。要执行 TypeScript 代码,必须先编译它,因此为了确保我们不会忘记这个额外的步骤,我们可以使用自定义命令。

Head over to the “package.json” file, and edit the “scripts” section like this:

"scripts": {

"test": "npx tsc && node dist/index.js"

}这样,在执行脚本时,只需在终端中输入 "npm run test"即可。

Finally, to scrape the data from the website, we will use Puppeteer, a headless browser library for Node.js that allows you to control a web browser and interact with websites programmatically. To install it, run this command in the terminal:

npm install puppeteer

It is highly recommended when you want to ensure the completeness of your data, as many websites today contain dynamic-generated content. If you’re curious, you can check out before continuing the Puppeteer documentation to fully see what it’s capable of.

查找数据

Now that you have your environment set up, we can start looking at extracting the data. For this article, I chose to scrape the list of houses and apartments available in a region from Toledo, Spain: https://www.idealista.com/pt/alquiler-viviendas/toledo/buenavista-valparaiso-la-legua/.

We’re going to extract the following data from each listing on the page:

- the URL;

- the title;

- the price;

- the details (number of rooms, surface, etc.);

- the description

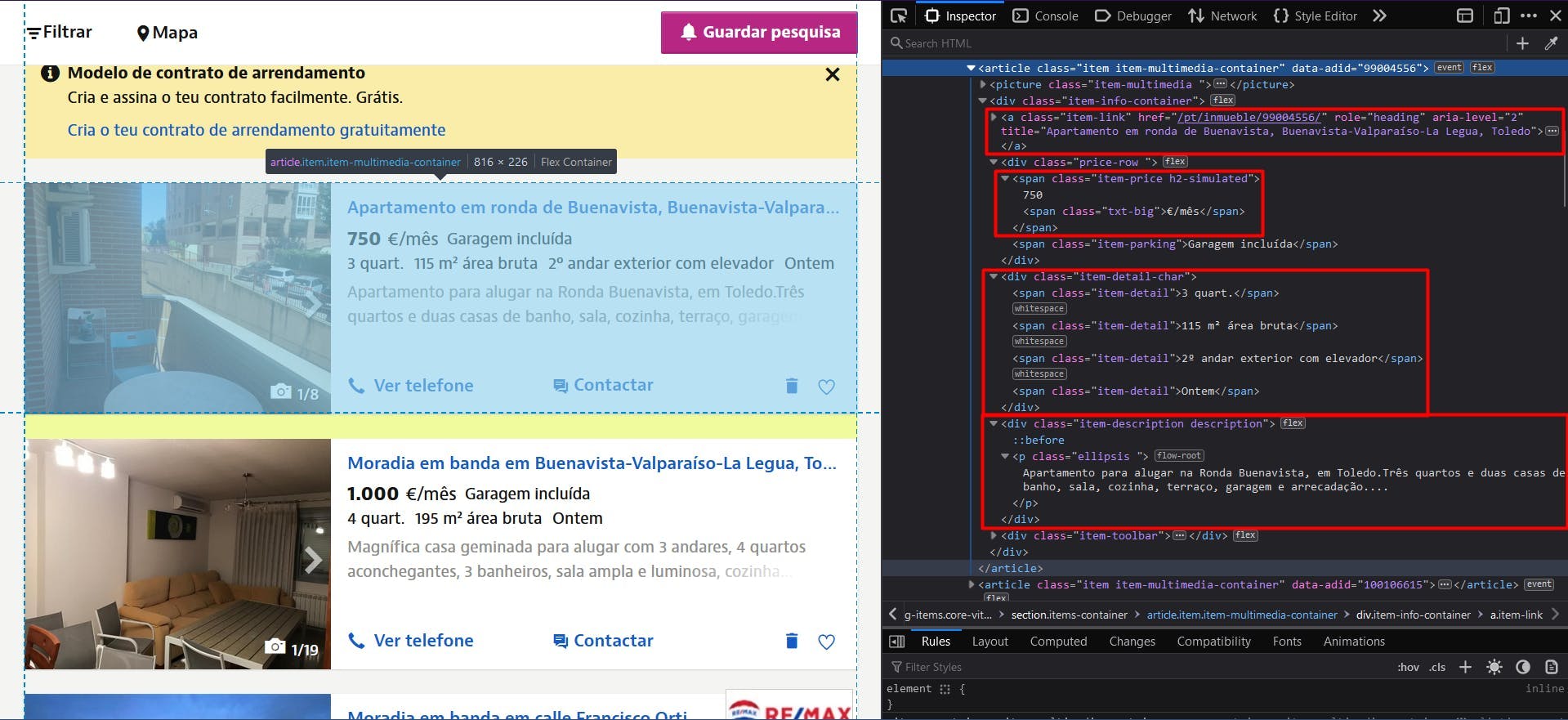

您可以在下面的截图中看到所有这些信息:

By opening the Developer Tools on each of these elements you will be able to notice the CSS selectors that we will use to locate the HTML elements. If you’re fairly new to how CSS selectors work, feel free to reach out to this beginner guide.

数据提取

To start writing our script, let’s verify that the Puppeteer installation went all right:

import puppeteer from 'puppeteer';

async function scrapeIdealistaData(idealista_url: string): Promise<void> {

// Launch Puppeteer

const browser = await puppeteer.launch({

headless: false,

args: ['--start-maximized'],

defaultViewport: null

})

// Create a new page

const page = await browser.newPage()

// Navigate to the target URL

await page.goto(idealista_url)

// Close the browser

await browser.close()

}

scrapeIdealistaData("https://www.idealista.com/pt/alquiler-viviendas/toledo/buenavista-valparaiso-la-legua/")

Here we open a browser window, create a new page, navigate to our target URL, and close the browser. For the sake of simplicity and visual debugging, I open the browser window maximized in non-headless mode.

Since all listings have the same structure and data, we can extract all of the information for the entire properties list in our algorithm. After running the script, we can loop through all of the results and compile them into a single list.

To get the URL of all the properties, we locate the anchor elements with the “item-link” class. Then we convert the result to a JavaScript array and map each element to the value of the “href” attribute.

// Extract listings location

const listings_location = await page.evaluate(() => {

const locations = document.querySelectorAll('a.item-link')

const locations_array = Array.from(locations)

return locations ? locations_array.map(a => a.getAttribute('href')) : []

})

console.log(listings_location.length, listings_location)

Then, for the titles, we can make use of the same anchor element, except that this time we will extract its “title” attribute.

// Extract listings titles

const listings_title = await page.evaluate(() => {

const titles = document.querySelectorAll('a.item-link')

const titles_array = Array.from(titles)

return titles ? titles_array.map(t => t.getAttribute('title')) : []

})

console.log(listings_title.length, listings_title)

For the prices, we locate the “span” elements having 2 class names: “item-price” and “h2-simulated”. It’s important to identify the elements as unique as possible, so you don’t alter your final result. It needs to be converted to an array as well and then mapped to its text content.

// Extract listings prices

const listings_price = await page.evaluate(() => {

const prices = document.querySelectorAll('span.item-price.h2-simulated')

const prices_array = Array.from(prices)

return prices ? prices_array.map(p => p.textContent) : []

})

console.log(listings_price.length, listings_price)

We apply the same principle for the property details, parsing the “div” elements with the “item-detail-char” class name.

// Extract listings details

const listings_detail = await page.evaluate(() => {

const details = document.querySelectorAll('div.item-detail-char')

const details_array = Array.from(details)

return details ? details_array.map(d => d.textContent) : []

})

console.log(listings_detail.length, listings_detail)

And finally, the description of the properties. Here we apply an extra regular expression to remove all the unnecessary newline characters.

// Extract listings descriptions

const listings_description = await page.evaluate(() => {

const descriptions = document.querySelectorAll('div.item-description.description')

const descriptions_array = Array.from(descriptions)

return descriptions ? descriptions_array.map(d => d.textContent.replace(/(\r\n|\n|\r)/gm, "")) : []

})

console.log(listings_description.length, listings_description)

Now you should have 5 lists, one for each piece of data we scraped. As I mentioned before, we should centralize them into a single one. This way, the information we gathered will be much easier to further process.

// Group the lists

const listings = []

for (let i = 0; i < listings_location.length; i++) {

listings.push({

url: listings_location[i],

title: listings_title[i],

price: listings_price[i],

details: listings_detail[i],

description: listings_description[i]

})

}

console.log(listings.length, listings)

最终结果应该是这样的

[

{

url: '/pt/inmueble/99004556/',

title: 'Apartamento em ronda de Buenavista, Buenavista-Valparaíso-La Legua, Toledo',

price: '750€/mês',

details: '\n3 quart.\n115 m² área bruta\n2º andar exterior com elevador\nOntem \n',

description: 'Apartamento para alugar na Ronda Buenavista, em Toledo.Três quartos e duas casas de banho, sala, cozinha, terraço, garagem e arrecadação....'

},

{

url: '/pt/inmueble/100106615/',

title: 'Moradia em banda em Buenavista-Valparaíso-La Legua, Toledo',

price: '1.000€/mês',

details: '\n4 quart.\n195 m² área bruta\nOntem \n',

description: 'Magnífica casa geminada para alugar com 3 andares, 4 quartos aconchegantes, 3 banheiros, sala ampla e luminosa, cozinha totalmente equipa...'

},

{

url: '/pt/inmueble/100099977/',

title: 'Moradia em banda em calle Francisco Ortiz, Buenavista-Valparaíso-La Legua, Toledo',

price: '800€/mês',

details: '\n3 quart.\n118 m² área bruta\n10 jan \n',

description: 'O REMAX GRUPO FV aluga uma casa mobiliada na Calle Francisco Ortiz, em Toledo.Moradia geminada com 148 metros construídos, distribuídos...'

},

{

url: '/pt/inmueble/100094142/',

title: 'Apartamento em Buenavista-Valparaíso-La Legua, Toledo',

price: '850€/mês',

details: '\n4 quart.\n110 m² área bruta\n1º andar exterior com elevador\n10 jan \n',

description: 'Apartamento muito espaçoso para alugar sem móveis, cozinha totalmente equipada.Composto por 4 quartos, 1 casa de banho, terraço.Calefaç...'

}

]

绕过僵尸检测

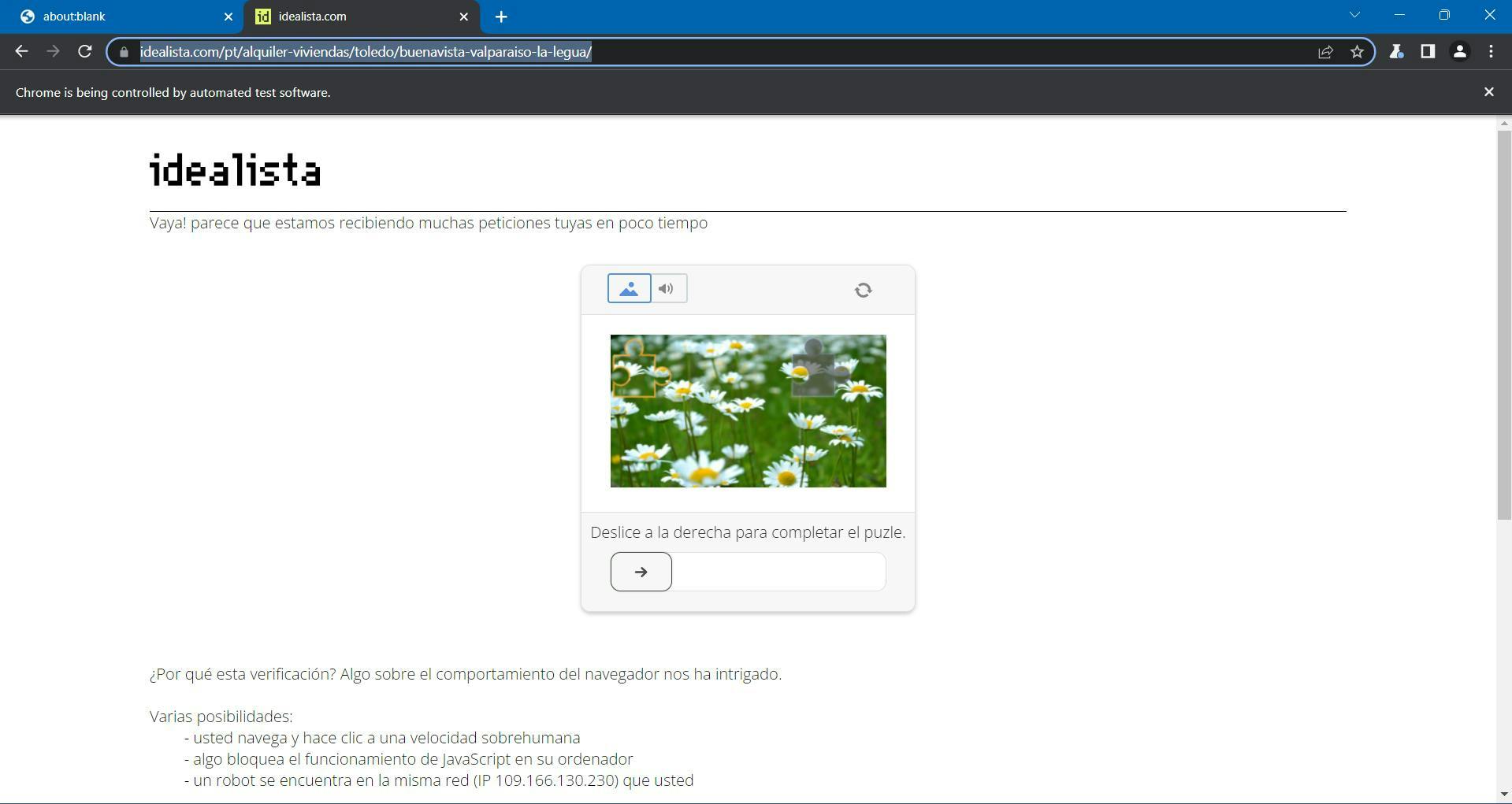

If you run your script at least 2 times during the course of this tutorial, you may have already noticed this annoying page:

Idealista uses DataDome as its antibot protection, which incorporates a GeeTest CAPTCHA challenge. You’re supposed to move the piece of the puzzle until the image is complete, and then you should be redirected back to your target page.

You can easily pause your Puppeteer script until you solve the challenge using this code:

await page.waitForFunction(() => {

const pageContent = document.getElementById('main-content')

return pageContent !== null

}, {timeout: 10000})This tells our script to wait 10 seconds for a specified CSS selector to appear in the DOM. It should be enough for you to solve the CAPTCHA and then let the navigation to complete.

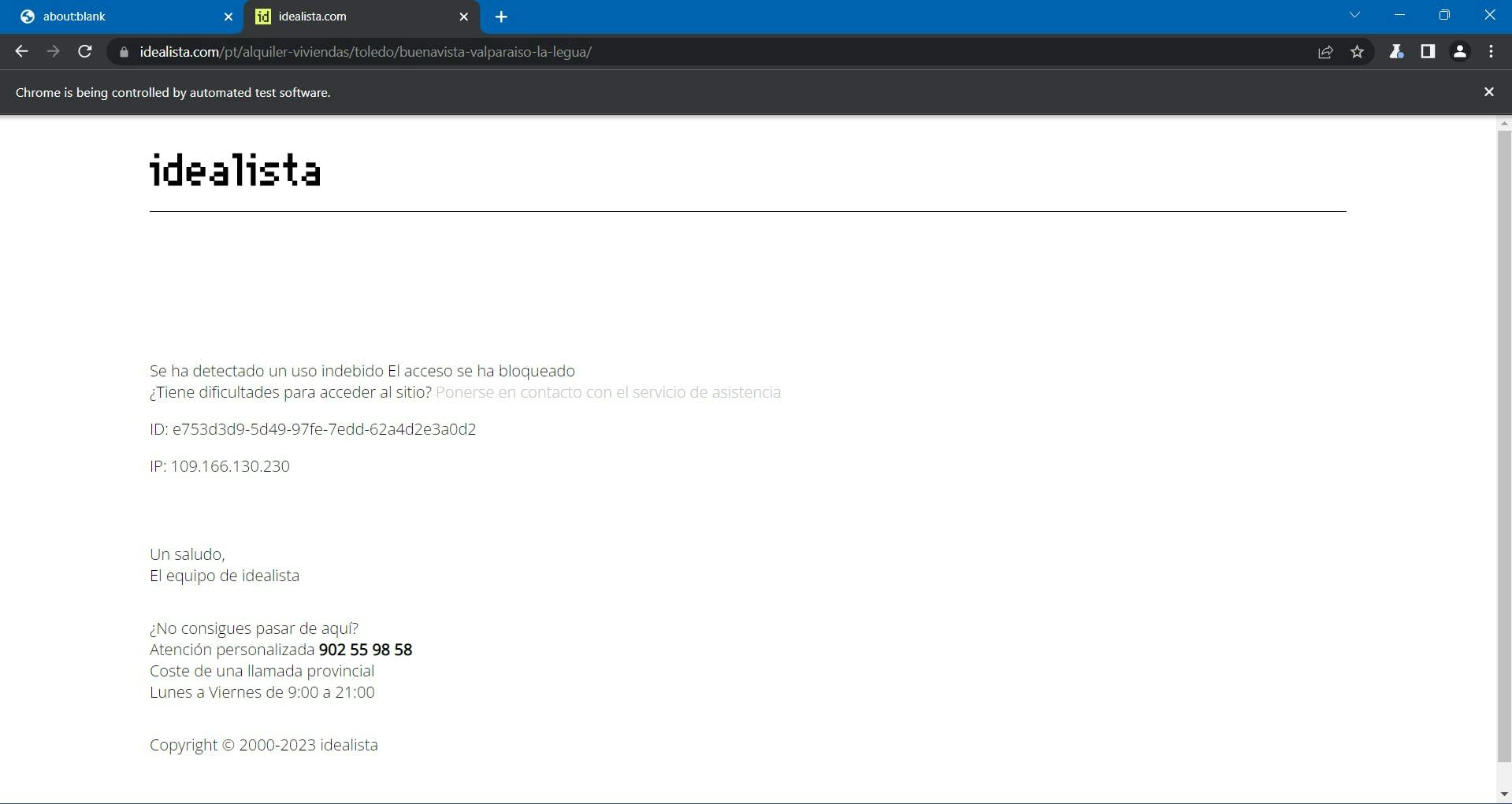

…Unless the Idealista page will block you anyway.

At this point, the process became more complex and challenging, and you didn’t even scale up your project.

As I mentioned before, Idealista is protected by DataDome. They collect multiple browser data to generate and associate you with a unique fingerprint. If they are suspicious, you receive the CAPTCHA challenge above, which is pretty difficult to automatically solve.

在收集到的浏览器数据中,我们发现

- Navigator 对象的属性(deviceMemory、hardwareConcurrency、languages、platform、userAgent、webdriver 等)。

- 时间和性能检查

- WebGL

- WebRTC IP sniffing

- recording mouse movements

- inconsistencies between the User-Agent and your operating system

- and many more.

One way to overcome these challenges and continue scraping at a large scale is to use a scraping API. These kinds of services provide a simple and reliable way to access data from websites like Idealista.com, without the need to build and maintain your own scraper.

WebScrapingAPI 就是这样一款产品。它的代理旋转机制完全避免了验证码,其扩展知识库可以随机化浏览器数据,使其看起来像真实用户。

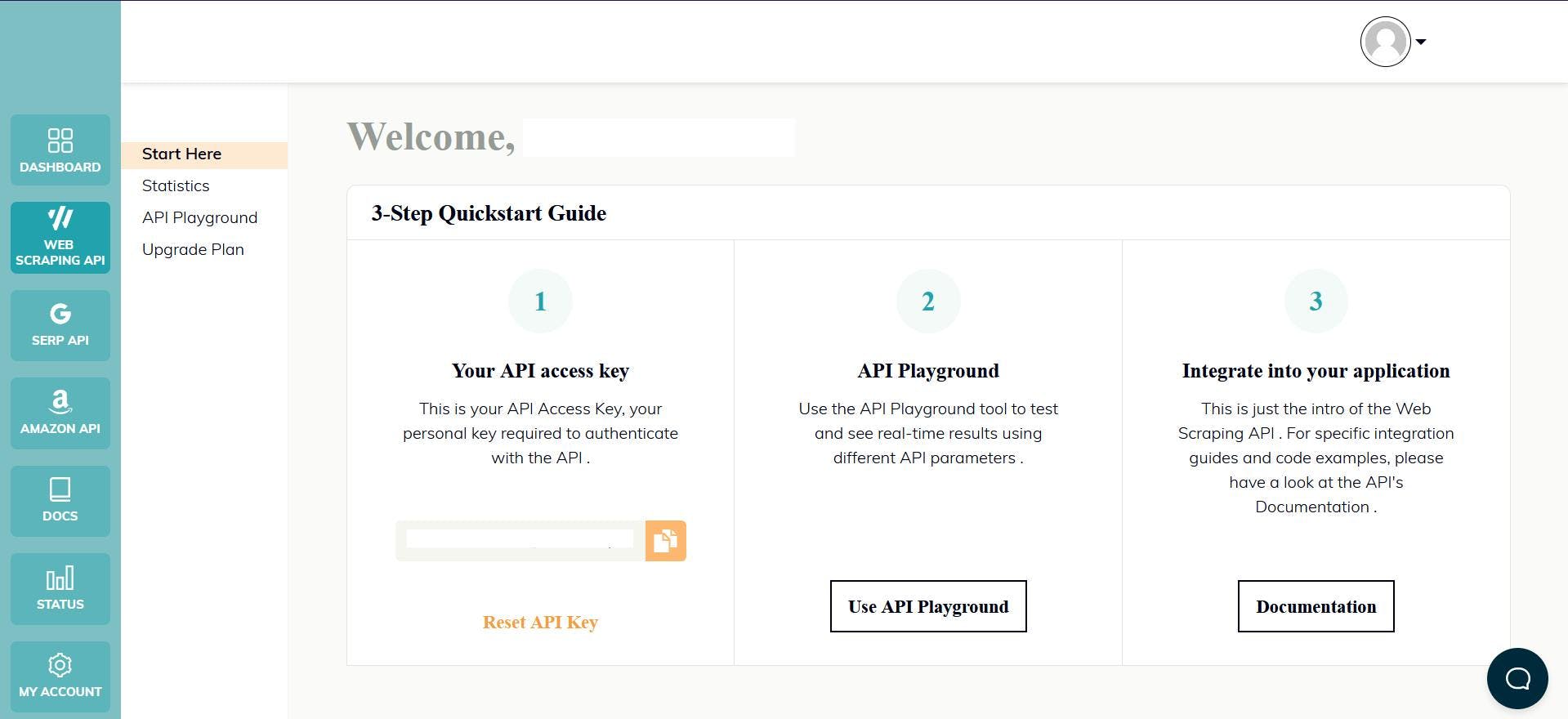

设置简单快捷。你只需注册一个账户,就会收到 API 密钥。您可以在仪表板上访问该密钥,它用于验证您发送的请求。

由于您已经设置了 Node.js 环境,我们可以使用相应的 SDK。运行以下命令将其添加到项目依赖项中:

npm install webscrapingapi

现在只需根据 API 调整之前的 CSS 选择器即可。提取规则的强大功能使我们可以在不做重大修改的情况下解析数据。

import webScrapingApiClient from 'webscrapingapi';

const client = new webScrapingApiClient("YOUR_API_KEY");

async function exampleUsage() {

const api_params = {

'render_js': 1,

'proxy_type': 'residential',

'timeout': 60000,

'extract_rules': JSON.stringify({

locations: {

selector: 'a.item-link',

output: '@href',

all: '1'

},

titles: {

selector: 'a.item-link',

output: '@title',

all: '1'

},

prices: {

selector: 'span.item-price.h2-simulated',

output: 'text',

all: '1'

},

details: {

selector: 'div.item-detail-char',

output: 'text',

all: '1'

},

descriptions: {

selector: 'div.item-description.description',

output: 'text',

all: '1'

}

})

}

const URL = "https://www.idealista.com/pt/alquiler-viviendas/toledo/buenavista-valparaiso-la-legua/"

const response = await client.get(URL, api_params)

if (response.success) {

// Group the lists

const listings = []

for (let i = 0; i < response.response.data.locations.length; i++) {

listings.push({

url: response.response.data.locations[i],

title: response.response.data.titles[i],

price: response.response.data.prices[i],

details: response.response.data.details[i],

description: response.response.data.descriptions[i].replace(/(\r\n|\n|\r)/gm, "")

})

}

console.log(listings.length, listings)

} else {

console.log(response.error.response.data)

}

}

exampleUsage();

结论

In this article, we have shown you how to scrape Idealista, a popular Spanish real estate website, using TypeScript and Puppeteer. We've gone through the process of setting up the prerequisites and scraping the data, and we discussed some ways to improve the code.

Web scraping Idealista can provide valuable information for businesses and individuals. By using the techniques outlined in this article, you can extract data such as property URLs, prices, and descriptions from the website.

Additionally, If you want to avoid the antibot measures and the complexity of the scraping process, using a professional scraper can be more efficient and reliable than creating your own.

By following the steps and techniques outlined in this guide, you can unlock the power of web scraping Idealista and use it to support your business needs. Whether it's for market research, lead generation, or creating new business opportunities, web scraping Idealista can help you stay ahead of the competition.

相关文章

了解如何将代理与流行的 JavaScript HTTP 客户端 node-fetch 结合使用,以构建网络搜刮程序。了解代理在网络搜刮中的工作原理,将代理与 node-fetch 集成,并构建一个支持代理的网络搜刮程序。