The Ultimate Guide to Web Scraping With C++

Raluca Penciuc on Jul 05 2021

Two million years ago, a bunch of cavemen figured that a rock on a stick could be useful. Things only got a bit out of hand from there. Now, our problems are less about running away from saber-toothed tigers and more about when teammates name their commits “did sutff.”

While the difficulties changed, our obsession with creating tools to overcome them hasn’t. Web scrapers are a testament to that. They’re digital tools designed to solve digital problems.

Today we’re going to build a new tool from scratch, but instead of rocks and sticks, we’ll use C++, which is arguably harder. So, stay tuned to learn how to make a C++ web scraper and how to use it!

Understanding web scraping

Regardless of which programming language you choose, you need to understand how web scrapers work. This knowledge is paramount to writing the script and having a functional tool at the end of the day.

The benefits of web scraping

It’s not hard to imagine that a bot doing all your research for you is a lot better than copying information by hand. The advantages grow exponentially if you need large amounts of data. If you’re training a machine learning algorithm, for example, a web scraper can save you months of work.

For the sake of clarity, let’s go over every way in which web scrapers help you:

- Spend less time spent on tedious work — this one is the most apparent benefit. Scrapers extract data as fast as it would take your browser to load the page. Give the bot a list of URLs, and you instantly get their content.

- Gain higher data accuracy — human hands inevitably lead to human error. In comparison, the bot will always pull all the information, exactly how it’s presented, and parse it according to your instructions.

- Keep an organized database — as you gather data, it can become harder and harder to keep track of it all. The scraper doesn’t only get content but also stores it in whichever format makes your life easier.

- Use up-to-date information — in many situations, data changes from one day to the next. For an example, just look at stock markets or online shop listings. With a few lines of code, your bot can scrape the same pages at fixed intervals and update your database with the latest info.

WebScrapingAPI exists to bring you all those benefits while also navigating around all the different roadblocks scrapers often encounter on the web. All this sounds great, but how do they translate into actual projects?

Web scraping use cases

There are plenty of different ways to use web scrapers. The sky’s the limit! Still, some use cases are more common than others.

Let's go into more details on each situation:

- Search engine optimization — Scraping search results pages for your keywords is an easy and fast way to determine who you need to surpass in ranking and what kind of content can help you do it.

- Market research — After checking how your competitors are doing in searches, you can go further and analyze their whole digital presence. Use the web scraper to see how they use PPC, describe their products, what kind of pricing model they use, and so on. Basically, any public information is at your fingertips.

- Brand protection — People out there are talking about your brand. Ideally, it’s all good things, but that’s not a guarantee. A bot is well equipped to navigate review sites and social media platforms and report to you whenever your brand is mentioned. Whatever they’re saying, you’ll be ready to represent your brand’s interests.

- Lead generation — Bridging the gap between your sales team and possible clients on the Internet is seldom easy. Well, it becomes a whole lot easier once you use a web scraping bot to gather contact information in online business registries like the Yellow Pages or Linkedin.

- Content marketing — Creating high-quality content takes a lot of work, no doubt about that. You not only have to find credible sources and a wealth of information but also learn the kind of tone and approach your audience will like. Luckily, those steps become trivial if you have a bot to do the heavy lifting.

There are plenty of other examples out there. Of course, you could also develop an entirely new way to use data extraction to your advantage. We always encourage the creative use of our API.

The Challenges of web scraping

As valuable as web scrapers are, it’s not all sunshine and rainbows. Some websites don’t like being visited by bots. It’s not difficult to program your scraper to avoid putting a serious strain on the visited website, but their vigilance against bots is warranted. After all, the site might not be able to tell the difference between a well-mannered bot and a malicious one.

As a result, there are a few challenges that scrapers run into while out and about.

The most problematic hurdles are:

- IP blocks — There are several ways to determine if a visitor is a human or machine. The course of action after identifying a bot, however, is much simpler - IP blocking. While this doesn’t often happen if you take the necessary precautions, it’s still a good idea to have a proxy pool prepared so that you can continue extracting data even if one IP is banned.

- Browser fingerprinting — Every request that you send to a website also gives away some of your information. The IP is the most common example, but request headers also tell the site about your operating system, browser, and other small details. If a website uses this information to identify who is sending the requests and realizes that you’re browsing way faster than a human should, you can expect to get promptly blocked.

- Javascript rendering — Most modern websites are dynamic. That is to say, they use javascript code to customize pages for the user and offer a more tailored experience. The problem is that simple bots get stuck on that code since they can’t execute it. The result: the scraper retrieves javascript instead of the intended HTML that holds relevant information.

- Captchas — When websites aren’t sure if a visitor is a human or a bot, they’ll redirect them to a Captcha page. To us, it’s a mild annoyance at best, but for bots, it’s usually a complete stop; unless they have Captcha solving scripts included.

- Request throttling — The simplest way to keep visitors from overwhelming the website’s server is to limit the number of requests a single IP can send in a fixed time. After that number is reached, the scraper may have to wait out the timer, or it might have to solve a Captcha. Either way, it’s not good for your data extraction project.

Understanding the web

If you’re to build a web scraper, you should know a few key concepts of how the Internet works. After all, your bot will not only use the Internet to gather data, but it also has to blend in with the other billions of users.

We like to think of websites like books, and reading at a page only means flipping to the correct number and looking. In reality, it’s more akin to telegrams sent between you and the book. You request a page, and it’s sent to you. Then you order the next one, and the same thing happens.

All this back and forth happens through HTTP, or Hypertext Transfer Protocol. For you, the visitor, to see a page, your browser sends an HTTP request and receives an HTTP response from the server. The content you want will be included in that response.

HTTP requests are made up of four components:

- The URL (Uniform Resource Locator) is the address you’re sending requests to. It leads to a unique resource that can be a whole web page, a single file, or anything in between.

- The Method conveys what kind of action you want to take. The most common method is GET, which retrieves data from the server. Here’s a list of the most common methods.

- The Headers are bits of metadata that give shape to your request. For example, if you have to provide a password or credentials to access data, they go in their special header. If you want to receive data in JSON format specifically, you mention that in a header. User-Agent is one of the best-known headers in web scraping because websites use it to fingerprint visitors.

- The Body is the general-purpose part of the request that stores data. If you’re getting content, this will probably be empty. On the other hand, when you want to send information to the server, it’ll be found here.

After sending the request, you’ll get a response even if the request failed to receive any data. The structure looks like this:

- The Status Code is a three-digit code that tells you at a glance what happened. In short, a code starting with ‘2’ usually means success, and one starting with ‘4’ signals failure.

- The Headers have the same function as their counterparts. For example, if you receive a specific type of file, a header will tell you the format.

- The Body is present as well. If you requested content with GET and it succeeds, you’ll find the data here.

REST APIs use HTTP protocols, much like web browsers. So, if you decide that C++ web scrapers are too much work, this info will also be helpful for you if you choose to use WebScrapingAPI, which already handles all data extraction challenges.

Understanding C++

In terms of programming languages, you can’t go much more old-school than C and C++. The language appeared in 1985 as the perfected version of “C with classes.”

While C++ is used for general-purpose programming, it’s not really the first language one would consider for a web scraper. It has some disadvantages, but the idea is not without its merits. Let’s explore them, shall we?

C++ is object-oriented, meaning that it uses classes, data abstraction, and inheritance to make your code easier to reuse and repurpose for different needs. Since data is treated as an object, it’s easier for you to store and parse it.

Plenty of developers know at least a bit of C++. Even if you didn’t learn about it in uni, you (or a teammate) probably know a bit of C++. That extends to the whole software development community, so it won’t be hard for you to get a second opinion on the code.

C++ is highly scalable. If you start with a small project and decide that web scraping is for you, most of the code is reusable. A few tweaks here and there, and you’ll be ready for much larger data volumes.

On the other hand, C++ is a static programming language. While this ensures better data integrity, it’s not as helpful as dynamic languages when dealing with the Internet.

Also, C++ isn’t well suited for building crawlers. This may not be a problem if you only want a scraper. But if you’re going to add a crawler to generate URL lists, C++ isn’t a good choice.

Ok, we talked about many important things, but let’s get to the meat of the article — coding a web scraper in C++.

Building a web scraper with C++

Prerequisites

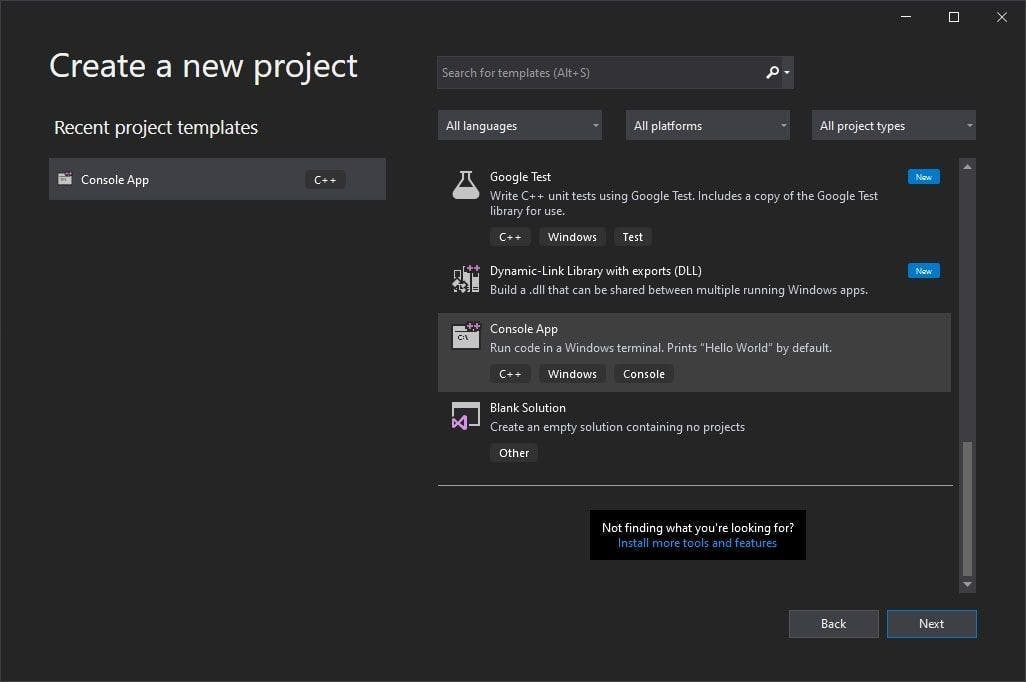

- C++ IDE. In this guide, we will use Visual Studio.

- vcpkg is a C/C++ package manager created and sustained by Windows

- cpr is a C/C++ library for HTTP requests, built as a wrapper for the classic cURL and inspired by the Python requests library.

- gumbo is an HTML parser entirely written in C, which provides wrappers for multiple programming languages, including C++.

Setup the environment

1. After downloading and installing Visual Studio, create a simple project using a Console App template.

2. Now we will configure our package manager. Vcpkg provides a well-written tutorial to get you started as quickly and fast as possible.

Note: when the installation is done, it would be helpful to set an environment variable so that you can run vcpkg from any location on your computer.

3. Now it’s time to install the libraries we need. If you set an environment variable, then open any terminal and run the following commands:

> vcpkg install cpr

> vcpkg install gumbo

> vcpkg integrate install

If you didn’t set an environment variable, simply navigate to the folder where you installed vcpkg, open a terminal window and run the same commands.

The first two commands install the packages we need to build our scraper, and the third one helps us integrate the libraries in our project effortlessly.

Pick a website and inspect the HTML

And now we are all set! Before building the web scraper, we need to pick a website and inspect its HTML code.

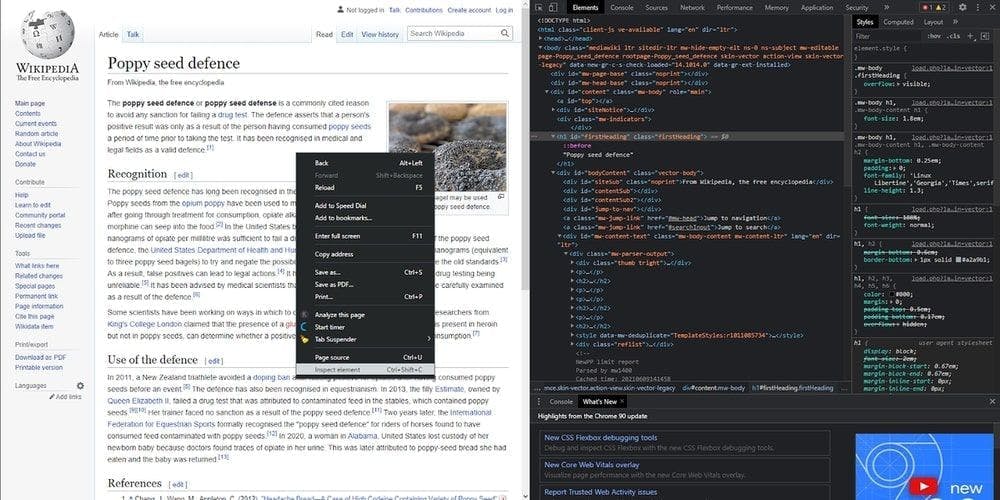

We went to Wikipedia and picked a random page from the “Did you know …” section. So, today’s scraped page will be the Wikipedia article about the poppy seed defense, and we will extract some of its components. But first, let’s take a look at the page structure. Right-click anywhere on the article, then on “Inspect element” and voila! The HTML is ours.

Extract the title

Now we can begin writing the code. To extract the information, we have to download the HTML locally. First, import the libraries we just downloaded:

#include <iostream>

#include <fstream>

#include "cpr/cpr.h"

#include "gumbo.h"

Then we make an HTTP request to the target website to retrieve the HTML.

std::string extract_html_page()

{

cpr::Url url = cpr::Url{"https://en.wikipedia.org/wiki/Poppy_seed_defence"};

cpr::Response response = cpr::Get(url);

return response.text;

}

int main()

{

std::string page_content = extract_html_page();

}

The page_content variable now holds the article’s HTML, and we will further use it to extract the data we need. This is where the gumbo library comes in handy.

We use the gumbo_parse method to convert the previous page_content string to an HTML tree, and then we call our implemented function search_for_title for the root node.

int main()

{

std::string page_content = extract_html_page();

GumboOutput* parsed_response = gumbo_parse(page_content.c_str());

search_for_title(parsed_response->root);

// free the allocated memory

gumbo_destroy_output(&kGumboDefaultOptions, parsed_response);

}

The called function will traverse the HTML tree in-depth to look for the <h1> tag by making recursive calls. When it finds the title, it’s displaying it in the console and will exit the execution.

void search_for_title(GumboNode* node)

{

if (node->type != GUMBO_NODE_ELEMENT)

return;

if (node->v.element.tag == GUMBO_TAG_H1)

{

GumboNode* title_text = static_cast<GumboNode*>(node->v.element.children.data[0]);

std::cout << title_text->v.text.text << "\n";

return;

}

GumboVector* children = &node->v.element.children;

for (unsigned int i = 0; i < children->length; i++)

search_for_title(static_cast<GumboNode*>(children->data[i]));

}

Extract the links

The same main principle is applied for the rest of the tags, traversing the tree and getting what we are interested in. Let’s get all the links and extract their href attribute.

void search_for_links(GumboNode* node)

{

if (node->type != GUMBO_NODE_ELEMENT)

return;

if (node->v.element.tag == GUMBO_TAG_A)

{

GumboAttribute* href = gumbo_get_attribute(&node->v.element.attributes, "href");

if (href)

std::cout << href->value << "\n";

}

GumboVector* children = &node->v.element.children;

for (unsigned int i = 0; i < children->length; i++)

{

search_for_links(static_cast<GumboNode*>(children->data[i]));

}

}

See? Pretty much the same code, except for the tag we are looking for. The gumbo_get_attribute method can extract any attribute we name. Therefore you can use it to look for classes, IDs, etc.

It’s essential to null check the value of the attribute before displaying it. In high-level programming languages, this is unnecessary, as it will simply display an empty string, but in C++, the program will crash.

Write to CSV file

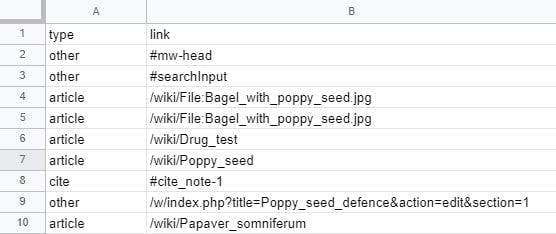

There are many links out there, all mixed throughout the content. And this was a really short article, too. So let’s save them all externally and see how we can make a distinction between them.

First, we create and open a CSV file. We do this outside the function, right near the imports. Our function is recursive, meaning that we will have A LOT of files if it creates a new file every time it’s called.

std::ofstream writeCsv("links.csv");Then, in our main function, we write the first row of the CSV file right before calling the function for the first time. Do not forget to close the file after the execution is done.

writeCsv << "type,link" << "\n";

search_for_links(parsed_response->root);

writeCsv.close();

Now, we write its content. In our search_for_links function, when we find a <a> tag, instead of displaying in the console now we do this:

if (node->v.element.tag == GUMBO_TAG_A)

{

GumboAttribute* href = gumbo_get_attribute(&node->v.element.attributes, "href");

if (href)

{

std::string link = href->value;

if (link.rfind("/wiki") == 0)

writeCsv << "article," << link << "\n";

else if (link.rfind("#cite") == 0)

writeCsv << "cite," << link << "\n";

else

writeCsv << "other," << link << "\n";

}

}

We take the href attribute value with this code and put it in 3 categories: articles, citations, and the rest.

Of course, you can go much further and define your own link types, like those that look like an article but are actually a file, for example.

Web scraping alternatives

Now you know how to build your own web scraper with C++. Neat, right?

Still, you may have told yourself at some point “This is starting to be more trouble than it’s worth!”, and we wholeheartedly understand. Truth be told, coding a scraper is an excellent learning experience, and the programs are suitable for small sets of data, but that’s about it.

A web scraping API is your best option if you need a fast, reliable, and scalable data extraction tool. That’s because it comes with all the functionalities you need, like a rotating proxy pool, Javascript rendering, Captcha solvers, geolocation options, and many more.

Don’t believe me? Then you should see for yourself! Start your free WebScrapingAPI trial and find out just how accessible web scraping can be!

News and updates

Stay up-to-date with the latest web scraping guides and news by subscribing to our newsletter.

We care about the protection of your data. Read our Privacy Policy.

Related articles

Learn what’s the best browser to bypass Cloudflare detection systems while web scraping with Selenium.

Discover how to efficiently extract and organize data for web scraping and data analysis through data parsing, HTML parsing libraries, and schema.org meta data.

Are XPath selectors better than CSS selectors for web scraping? Learn about each method's strengths and limitations and make the right choice for your project!